Intro to Progressive Analytics #2: Sources and Pipelines

Data is more siloed than ever before. Three quarters of companies run all or most of their business on SaaS technologies, and the average mid-sized company uses dozens of SaaS applications. Add to that internal databases and all the spreadsheets used to make decisions. To say data is siloed is an understatement.

Unsurprisingly, most companies struggle with analytics. When data is scattered everywhere, it is easy to become disorganized quickly. 80% of analytics efforts never deliver business value, and a big reason is the complexity of so many sources.

Many commercial and open source tools have emerged to extract data from these sources. The largest claim to extract data from over 200 SaaS products. Meanwhile, estimates of the total number of SaaS products on the market start at 10,000.

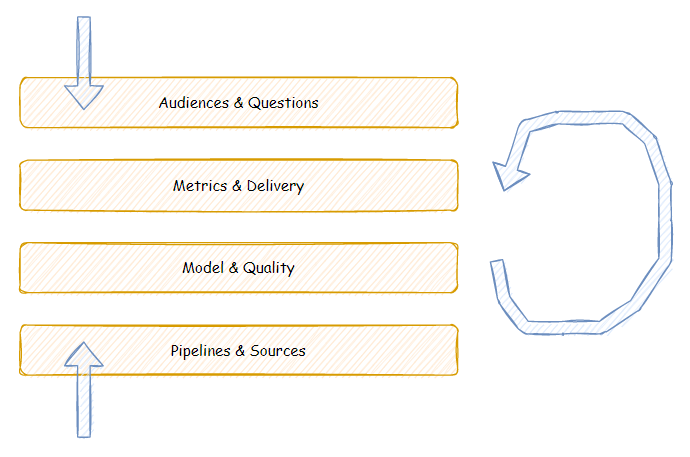

Progressive Analytics is a framework for achieving value from analytics quickly and sustainably. In an earlier article I discussed the benefits of following a simple, proven framework. Progressive Analytics is built on the principles of agile and lean, tailored to the modern data architecture. This article covers the bottom layer: Sources & Pipelines.

Layer Four: Pipelines & Sources

A data pipeline is simply anything that moves data from one place to another. There are many ways to implement a pipeline, and some offer more flexibility, reliability, or observability than others.

A Data Source is described by a convenient, natural hierarchy that lets you refer precisely to where data comes from. In Progressive Analytics we use this hierarchy:

| Level | Description | Examples |
|---|---|---|
| Provider | The organization that owns or provides the data to your organization | Google |
| System | The product, API, or system that provides the data | Google Ads, Google Analytics, Google Sheets |
| Feed | Most systems provide multiple groupings of data: APIs provide separate endpoints for various resources, databases have separate tables, streams have topics, etc. | Google Ads Account, Google Ads Campaign, Google Ads Ad Group, Google Ads Ad |
| Object | Whether batch or streamed, individual records are always grouped or aggregated and stored in some sort of file or object after they are extracted and processed. Over time, a Feed will have more than one Object. | ad_extract_20210801.csv, campaign_extract_20210801.csv |
| Record | A group of predetermined fields. Although the structure may vary, it is typically fixed or mostly known in advance. | An individual row in a CSV file; an individual record in a JSON file |
| Field | An individual attribute or property of a record. A group of fields makes up a record. | ad.status, ad.label, ad.ID, ad.title |

These levels provide an abstraction that applies to data from any source, and a simple way to gauge the amount of work involved.
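To make the "amount of work" idea concrete, here is a minimal sketch that models the planning levels of the hierarchy as Python dataclasses and counts the items at each level. The class names, the `work_summary` helper, and the campaign field names are hypothetical illustrations, not part of any Progressive Analytics tooling. The Object and Record levels are left out because they are produced at run time rather than planned up front.

```python
from dataclasses import dataclass, field


@dataclass
class Feed:
    name: str                                        # e.g. an API endpoint or a database table
    fields: list[str] = field(default_factory=list)  # the Fields each Record carries


@dataclass
class System:
    name: str
    feeds: list[Feed] = field(default_factory=list)


@dataclass
class Provider:
    name: str
    systems: list[System] = field(default_factory=list)


def work_summary(providers: list[Provider]) -> dict[str, int]:
    """Count items at each planning level as a rough measure of the work involved."""
    systems = [s for p in providers for s in p.systems]
    feeds = [f for s in systems for f in s.feeds]
    return {
        "providers": len(providers),
        "systems": len(systems),
        "feeds": len(feeds),
        "fields": sum(len(f.fields) for f in feeds),
    }


# Hypothetical example; the campaign field names are illustrative only.
google = Provider("Google", [
    System("Google Ads", [
        Feed("Campaign", ["campaign.id", "campaign.name"]),
        Feed("Ad", ["ad.ID", "ad.status", "ad.label", "ad.title"]),
    ]),
])
print(work_summary([google]))
# {'providers': 1, 'systems': 1, 'feeds': 2, 'fields': 6}
```

Adding a Feed or a Field to the plan shows up immediately as a change in these counts, which is the kind of visibility the hierarchy is meant to give.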

A side note on structured vs. unstructured vs. semi-structured data: most business analytics are driven by structured data, and most operational systems and SaaS products can provide structured data. Progressive Analytics (like most analytics efforts) assumes you are dealing with structured or mostly structured data, because a human can rationalize and analyze that data.

Whiteboard vs. Keyboard

In an earlier post I discussed the top layer, Audiences & Questions. It is important that scope and outcomes from this layer drive the Pipelines & Sources layer from the beginning, and that both workstreams happen in parallel.

Unfortunately, high-level, strategic conversations about an analytics effort are often far removed from the actual technical implementation. We call this whiteboard vs. keyboard. Business context and nuance that are well understood during planning conversations are too often lost by the time the engineer sits down to configure a data pipeline or transformation.

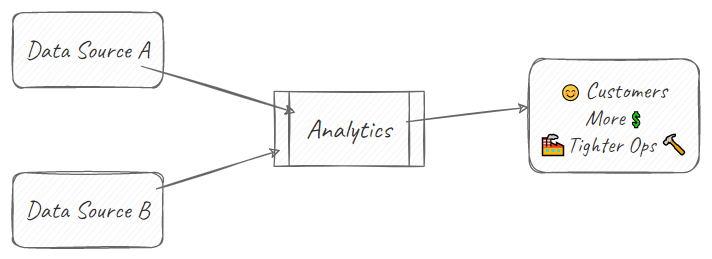

What is clear on a whiteboard sketch is easy to lose sight of at the keyboard, because the two operate at such different levels.

Aligning the activities of the whiteboard and the keyboard is why we advocate two parallel workstreams: one top-down and the other bottom-up. Additionally, thin-slicing the work allows anyone, regardless of the level at which they operate, to keep all the relevant context and goals in mind.

When the conversation leads to adding just one more provider, feed, or even field, you must push hard to defer that work to a future iteration unless absolutely necessary.

Getting Organized

Below are the activities in a typical iteration.

Scope

The data sources in scope for any iteration should be only the data necessary to answer the in-scope questions identified in the top-down workstream. Defer all other providers, systems, feeds, and so on to later iterations.
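One way to apply this rule mechanically is to record which System, Feed, and Field each Question needs, then derive the in-scope Feeds from the in-scope Questions. The sketch below assumes that simple mapping; the questions, the `plan_scope` helper, the `campaign.conversions` field, and the Google Analytics "Sessions" feed are all hypothetical examples.

```python
# Hypothetical example: each in-scope Question lists the
# (System, Feed, Field) triples needed to answer it.
QUESTIONS = {
    "Which campaigns drive the most conversions?": [
        ("Google Ads", "Campaign", "campaign.name"),
        ("Google Ads", "Campaign", "campaign.conversions"),
    ],
    "Which ads are currently paused?": [
        ("Google Ads", "Ad", "ad.status"),
        ("Google Ads", "Ad", "ad.title"),
    ],
}

# Hypothetical inventory of (System, Feed) pairs that could be extracted.
AVAILABLE_FEEDS = {
    ("Google Ads", "Account"),
    ("Google Ads", "Campaign"),
    ("Google Ads", "Ad Group"),
    ("Google Ads", "Ad"),
    ("Google Analytics", "Sessions"),
}


def plan_scope(in_scope, questions, available):
    """Split available Feeds into those needed now and those to defer."""
    needed = {(system, feed) for q in in_scope for system, feed, _field in questions[q]}
    return needed, available - needed


now, later = plan_scope(["Which ads are currently paused?"], QUESTIONS, AVAILABLE_FEEDS)
print(sorted(now))   # [('Google Ads', 'Ad')]
print(len(later))    # 4
```

Anything in the deferred set waits for a future iteration, unless a new in-scope Question pulls it in.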

Inventory

A complete inventory works through the Provider, System, and Feed levels of the hierarchy for everything in scope.

Access

Gaining access is a technical exercise: creating the API keys, firewall rules, or service accounts needed to get at the data.
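A small check like the one below can confirm that access is in place before any extraction is attempted. It is a minimal sketch that assumes credentials are supplied to the pipeline through environment variables; the variable names in `REQUIRED_CREDENTIALS` are hypothetical placeholders, not the names any Google client library actually expects.

```python
import os

# Hypothetical environment variable names per System. Real Systems have their
# own credential requirements (API keys, service accounts, database passwords, ...).
REQUIRED_CREDENTIALS = {
    "Google Ads": ["GOOGLE_ADS_DEVELOPER_TOKEN", "GOOGLE_ADS_REFRESH_TOKEN"],
    "Google Sheets": ["GOOGLE_SERVICE_ACCOUNT_FILE"],
}


def missing_credentials(systems, env=os.environ):
    """Map each in-scope System to the credential variables that are unset or empty."""
    missing = {}
    for system in systems:
        absent = [name for name in REQUIRED_CREDENTIALS.get(system, []) if not env.get(name)]
        if absent:
            missing[system] = absent
    return missing


print(missing_credentials(["Google Ads"], env={"GOOGLE_ADS_DEVELOPER_TOKEN": "abc"}))
# {'Google Ads': ['GOOGLE_ADS_REFRESH_TOKEN']}
```

An empty result means every in-scope System has its credentials configured; anything else is a gap to close before the data can flow.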

Flow

Above all else in the bottom-up workstream, get the data flowing into your data lake. Connecting to a source System and extracting data from it can involve many pitfalls and gotchas. Until data is flowing from the source System into a data lake or other storage location under your control, you have no way of knowing what stumbling blocks you will run into.

Remember that in an ELT (extract, load, transform) architecture, no transformation happens in this step. Keeping the step simple avoids adding yet another complexity to an already complicated activity.
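The "load" half of this step can be very small. The sketch below assumes the extract step already returns records as Python dictionaries and uses a local directory as a stand-in for the data lake. The `land_raw` helper and the `<root>/<system>/<feed>/` layout are hypothetical, and it writes JSON Lines rather than the CSV files shown in the hierarchy table. Records are written exactly as received, with no transformation.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def land_raw(records, lake_root, system, feed, run_date):
    """Write extracted records to the lake as received, one Object per Feed per run.

    Naming each Object after the run date means a Feed accumulates Objects over time.
    """
    folder = Path(lake_root) / system / feed
    folder.mkdir(parents=True, exist_ok=True)
    obj = folder / f"{feed}_extract_{run_date:%Y%m%d}.jsonl"
    with obj.open("w", encoding="utf-8") as fh:
        for record in records:
            fh.write(json.dumps(record) + "\n")  # one Record per line, untouched
    return obj


with tempfile.TemporaryDirectory() as lake:
    path = land_raw([{"ad.ID": 1, "ad.status": "PAUSED"}], lake, "google_ads", "ad", date(2021, 8, 1))
    print(path.name)  # ad_extract_20210801.jsonl
```

Any cleanup, renaming, or type conversion happens later, in the transformation layer, against these raw Objects.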

Assess

Many exercises and tools exist to analyze, profile, and understand the characteristics of data. Some analysis is appropriate directly in the source System, such as understanding which Feeds the System provides and which Fields are available in each Feed. Most analysis is appropriate after the data is under your control, for example:

- Do we have all the Fields we need to answer the Questions?

- Is the data complete or are there blank or null values?

- Are the patterns in the data expected?

- How prevalent are anomalies in the data?
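The first two questions lend themselves to a quick automated check once records have landed. This is a minimal sketch with a hypothetical `profile` helper, assuming records are dictionaries and treating both `None` and empty strings as blanks; checking patterns and anomalies usually needs domain-specific rules and is not covered here.

```python
def profile(records, required_fields):
    """Report required Fields that never appear, and the blank/null rate of the rest."""
    seen = set().union(*(r.keys() for r in records)) if records else set()
    report = {"missing_fields": sorted(set(required_fields) - seen), "null_rate": {}}
    for name in required_fields:
        if name in seen:
            nulls = sum(1 for r in records if r.get(name) in (None, ""))
            report["null_rate"][name] = nulls / len(records)
    return report


rows = [
    {"ad.ID": 1, "ad.status": "ENABLED"},
    {"ad.ID": 2, "ad.status": None},
    {"ad.ID": 3, "ad.status": "PAUSED"},
    {"ad.ID": 4, "ad.status": ""},
]
print(profile(rows, ["ad.ID", "ad.status", "ad.label"]))
# {'missing_fields': ['ad.label'], 'null_rate': {'ad.ID': 0.0, 'ad.status': 0.5}}
```

A missing Field points back to the Scope or Inventory steps, while a high null rate is a question to take to the owner of the source System.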

Iterate

Future iterations, driven by new Questions or Metrics, will add Fields, Feeds, and even new Providers. By defining each iteration in terms of the Data Source hierarchy, you will be clear on how much work is to be done and how long it will take.
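Defining an iteration in these terms makes it easy to see exactly what a proposed iteration adds. The sketch below assumes each iteration is written down as a set of (Provider, System, Feed, Field) tuples; the `iteration_delta` helper and the example fields are hypothetical.

```python
LEVELS = ("providers", "systems", "feeds", "fields")


def iteration_delta(current, proposed):
    """List what a proposed iteration adds at each level of the hierarchy.

    Each iteration is a set of (provider, system, feed, field) tuples; a tuple
    prefix of length 1..4 identifies the item at the corresponding level.
    """
    delta = {}
    for depth, level in enumerate(LEVELS, start=1):
        before = {t[:depth] for t in current}
        after = {t[:depth] for t in proposed}
        delta[level] = sorted(after - before)
    return delta


current = {("Google", "Google Ads", "Ad", "ad.status")}
proposed = current | {
    ("Google", "Google Ads", "Ad", "ad.title"),
    ("Google", "Google Ads", "Campaign", "campaign.name"),
}
delta = iteration_delta(current, proposed)
print({level: len(items) for level, items in delta.items()})
# {'providers': 0, 'systems': 0, 'feeds': 1, 'fields': 2}
```

A new Field on an existing Feed is usually small work; a new Feed, System, or Provider shows up in the higher levels and signals a bigger iteration.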

Pipelines

A data Pipeline refers to the operations that move data. It is an automated, executable process that can be scheduled or triggered. Options include homegrown code, commercial products, and open source tools. Broadly, any system dealing with data pipelines needs technology to cover connections to the source systems, the actual movement of data, storage once the data is under your control, scheduling, and observability.
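As an illustration of the last two concerns, the sketch below wraps a single pipeline step with retries and logging so that every run leaves a record of what happened. It is a minimal, hypothetical helper (`run_with_retries`), not the API of any orchestration product; a scheduler such as cron or an orchestrator would be responsible for calling it, and the retry count and backoff values are arbitrary assumptions.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def run_with_retries(step, name, attempts=3, backoff_seconds=2.0):
    """Run one pipeline step, retrying on failure and logging every attempt."""
    for attempt in range(1, attempts + 1):
        start = time.monotonic()
        try:
            result = step()
        except Exception:
            log.exception("%s failed (attempt %d/%d)", name, attempt, attempts)
            if attempt == attempts:
                raise                                # give up; the scheduler sees the failure
            time.sleep(backoff_seconds * attempt)    # linear backoff between attempts
        else:
            log.info("%s succeeded in %.2fs", name, time.monotonic() - start)
            return result
```

For example, `run_with_retries(lambda: "done", "google_ads.ad")` logs one success line and returns `"done"`. Shipping these logs to a central place is one simple starting point for observability.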

This post does not analyze the technical options; it acknowledges that this work is organized and performed at the lowest level of the Progressive Analytics framework, Sources & Pipelines. Datateer provides a Managed Analytics Platform, where all of this is pre-built, packaged, and ready to go from day one. If you are starting from scratch, you may need to do some foundational work before diving into Progressive Analytics, such as architecture design, process definition, vendor selection, and integration among tools.

See how Sources & Pipelines fit into the broader Progressive Analytics framework by exploring these related articles:

- Audiences & Questions

- Metrics & Delivery (coming soon)

- Model & Quality (coming soon)

- Sources & Pipelines