Selecting the Right Visualization Tool with Confidence

This article is part of our series Selecting the Right Visualization Tool with Confidence. Other articles in the series include:

- Data Security and Compliance in Cloud-Native Business Intelligence

- Discovering the Analyst Experience and Impact on Data Democratization

Evaluating business intelligence tools is exhausting.

First of all, there are a ton of them on the market. The high-profile acquisitions of Tableau, Looker, Chartio, and Qlik must be inspiring to entrepreneurs who want similar exits. The field is crowded. Even after years of researching vendors and evaluating their products, we still discover new ones regularly.

Producing a basic tool (a UI on top of a visualization library) must be fairly easy, judging by how many are on the market right now. But building a solid product and company requires more than that. Many nuances can stop a report developer or designer in their tracks, or force workarounds. How could you ever cover all those situations in your evaluation?

Every vendor’s message starts to sound the same: some variation of how easy their tool will make the whole effort. Although 80% of the effort in data analytics goes to data engineering and modeling, some BI tool vendors will tell you all of that can somehow magically go away.

In my experience, the BI tool is the tip of the spear, or the tip of the iceberg. It provides the visual culmination of all the work and analysis a company has put into its data platform: the presentation layer intended to produce something clean and useful.

Really, more attention should be paid to getting the data, analysis, and metrics right. However, because the charts and visuals are what most users will interact with, the BI tool you choose is critical.

This article is the first in a series that will share our direct experience, the experience of our customers, and contributions from the community in the Chartio migration research project.

Below we lay out the overall approach we have developed to compare apples to apples. And in the coming weeks we will have articles diving even deeper into aspects of a BI tool evaluation we have found to be important:

- Data Security & Compliance

- Visualization Capabilities & Dashboarding

- Self-Service Analysis and “Data Democratization”

- The Support Experience

- End User Experience (including Embedding)

- Pricing and Budgeting

- Performance

- The Intangibles

- Miscellaneous and Doing Too Much

A visual scoring system

In a crowded market you need some way to compare things objectively. If you have ever bought or rented a place to live, you have probably experienced house hunting fatigue. You look at so many homes that they all start to blur together. You can’t remember whether the kitchen you liked was part of the first home you saw, or the second. Did you really like that 2nd house, or were you just tired by the time you saw the 8th and wanted to make a decision?

That is the feeling we had when trying to make sure we were evaluating all the options.

To combat this, many people make a list of features or other aspects of their evaluation, and then create a scoring or rating system. We started that way, too. But numbers in a spreadsheet only go so far.

Another thing we learned was that this decision was not a one-time event. Each time we learned something new about any given tool, we found ourselves revisiting the spreadsheet to re-evaluate. We needed something that we could return to time and again, without wasting time rehashing things already discussed or re-orienting to a bunch of numbers.

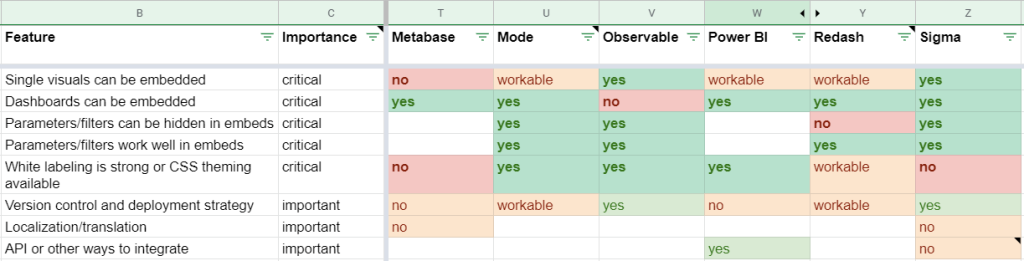

We are evaluating a visual tool, so why not do something visual? By giving each feature two ratings–importance and score–we were able to create a simple visual experience.

Here is a sample.

At a glance, we can:

- see the overall value of one tool against a field of competitors.

- focus in on a single feature and see how each tool compares.

- immediately identify any missing critical features or holes in our analysis.

Check out our Product Evaluation Matrix. Feel free to make a copy to get your own analysis started.
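For readers who prefer code to a spreadsheet, the importance-and-score idea can be sketched in a few lines of Python. This is a minimal illustration, not the matrix itself: the feature names, the 1-5 importance scale, the 0-5 score scale, the color thresholds, and every rating below are assumptions made up for the example.

```python
# Hypothetical ratings for illustration only; none of these come from a real evaluation.
# Importance: 1 (nice to have) to 5 (critical). Score: 0 (missing) to 5 (excellent).
IMPORTANCE = {"security": 5, "embedding": 4, "self_service": 3, "pricing": 2}

SCORES = {
    "Tool A": {"security": 4, "embedding": 2, "self_service": 5, "pricing": 3},
    "Tool B": {"security": 5, "embedding": 4, "self_service": 2, "pricing": 4},
}

CRITICAL = 5        # assumed importance level that counts as critical
MIN_ACCEPTABLE = 3  # assumed lowest passing score for a critical feature


def weighted_total(scores, importance):
    """Importance-weighted average, on the same 0-5 scale as the raw scores."""
    total_weight = sum(importance.values())
    return sum(w * scores.get(f, 0) for f, w in importance.items()) / total_weight


def color(score):
    """Map a score to a traffic-light color (thresholds are an assumption)."""
    if score >= 4:
        return "green"
    if score >= 3:
        return "yellow"
    return "red"


def critical_gaps(scores, importance):
    """Critical features that are missing or score below the acceptable minimum."""
    return [
        f for f, w in importance.items()
        if w >= CRITICAL and scores.get(f, 0) < MIN_ACCEPTABLE
    ]


for tool, scores in SCORES.items():
    cells = ", ".join(f"{f}={color(scores.get(f, 0))}" for f in IMPORTANCE)
    total = weighted_total(scores, IMPORTANCE)
    print(f"{tool}: {total:.2f} ({cells}) gaps={critical_gaps(scores, IMPORTANCE)}")
```

The weighted total gives the overall value of a tool, the per-feature colors support the feature-by-feature comparison, and `critical_gaps` flags a missing critical feature before it gets averaged away.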

Not everything can be critical

Regardless of how you score or evaluate, each vendor is trying with all their might to be different from the others. So we end up with many features and variations of features, many of which are appealing.

But not everything can be of critical importance to your company. In a perfect world, you could enumerate the features you want, and someone would give you an order form and a price tag, and you are off to the races. But in an imperfect world like ours, you have to choose a tool that most closely matches your needs and desires.

It is the tendency of all of us humans to overdo it and assume too many things are critical. At the extreme, if everything is equally weighted in your decision, you will end up with the most average product on the market, rather than the one that fits you best.
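A small sketch can make the equal-weighting trap concrete. The two tools, the five features, and all numbers below are hypothetical, chosen only to show how the ranking can flip once importance is concentrated on the few things that really matter.

```python
# Hypothetical scores (0-5) for two made-up tools.
TOOLS = {
    "Generalist": {"security": 4, "embedding": 3, "self_service": 4, "alerts": 4, "theming": 4},
    "Specialist": {"security": 5, "embedding": 5, "self_service": 3, "alerts": 2, "theming": 2},
}

# Everything marked equally important.
EQUAL = {feature: 5 for feature in TOOLS["Generalist"]}

# Only the features this (hypothetical) company truly depends on are weighted heavily.
FOCUSED = {"security": 5, "embedding": 5, "self_service": 2, "alerts": 1, "theming": 1}


def rank(tools, weights):
    """Return tool names ordered by importance-weighted average, best first."""
    total_weight = sum(weights.values())
    totals = {
        name: sum(weights[f] * scores[f] for f in weights) / total_weight
        for name, scores in tools.items()
    }
    return sorted(totals, key=totals.get, reverse=True)


print("Equal weights:  ", rank(TOOLS, EQUAL))
print("Focused weights:", rank(TOOLS, FOCUSED))
```

With equal weights the well-rounded tool comes out on top; with focused weights the tool that excels at the critical features does.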

Recognize emotion and play the game

Buying decisions are emotional. Most of the decision is subconscious. According to the best research we have, emotion is what really drives purchasing behavior and decision making in general. Experienced salespeople know this too, hence the saying, “sell the sizzle, not the steak.”

“95% of thought, emotion, and learning occur in the unconscious mind–that is, without our awareness.”

(Gerald Zaltman, How Customers Think)

We use objectivity and logic to talk ourselves into things. Recognize this about yourself and the dynamics of the evaluation and decision process.

Immediately after a good demo, you will have an affinity for the product that might stick. This is why every product company is willing to spend a lot of time demonstrating their product. On the other hand, if the sales engineer had a bad day, you might see the product in a less favorable light.

To solve this problem:

- Understand the sales process, and roll with it. First they qualify your company, budget, and timing; they push a demo; they provide statistics or other sales collateral based on your specific concerns; they give a free trial; they push for a decision; you negotiate terms and sign the 12- or 36-month contract. If you aren’t ready to move to the next stage, tell them. If they understand where they stand in your evaluation, they will often offer things to help you move forward, such as a longer trial period.

- Eliminate early. If you limit your critical criteria and push those items in the early-stage calls, you can avoid spending time in product demos or trials for products that do not fit.

- Pace yourself. Recognize that your deadline should drive the number of products that progress to your later stages. Give yourself time to evaluate, but set a deadline to force decision and action.

- Use the evaluation spreadsheet. The visualization is intended to jar us visually–green good, red bad–and wake us up if we are being sucked in emotionally to a tool that objectively doesn’t hold up.

- Review regularly. Have a regular review of your criteria and ratings with a decision committee. At least involve a trusted advisor or just a second set of eyes so that you can see things clearly and objectively.

What happens if you make a bad decision?

I highlight this point specifically because we made the wrong choice prior to discovering Chartio, and had to live with the consequences. We also found that an exhaustive review of every aspect of every product that might be the right one is impossible. Maybe you have that kind of time, and if so, use it. But if you are like us, you need to make a decision on somewhat incomplete information.

To mitigate the risk of a bad decision:

- Ask for extended trials. If you have the time to invest in deeper proof-of-concept exercises, many vendors will extend their trial period if they know they are in the running in a small field of competitors. Remember the sales stages and play the game.

- Avoid doing too much in the tool. It is easy to forget that these feature-rich tools should be focused on presenting data. Doing too much data modeling or manipulation in the BI tool creates lock-in. Keeping these other responsibilities in your data warehouse prevents you from becoming overly dependent on the presentation tool. Focus on your critical criteria, and be selective about what makes something critical.

- Remember the implementation cost. The cost of standing up a new BI tool is usually about the same as the cost of the tool itself. So plan for 2x the price tag before you see any return on investment from the tool.

- Have backup plans. If the tool does not deliver as advertised, what then? Often in business there are less optimal ways to accomplish something that you can use as a plan B. For example, we often embed custom visualizations. Our plan B was modifying our custom visualization server to overcome some limitations of a product we chose. Our costs increased, but it wasn’t the end of the world.